I recently encountered a requirement to integrate a legacy Document Management System with Amazon S3 in order to support a mobile field worker application. The core requirement is that when a document reaches a certain state within the Document Management System, we need to publish the file to an S3 bucket where it can be accessed from a mobile device. We will do so using a RESTful PUT call.

Introduction to Amazon S3 SDK for .Net

Entering this solution I knew very little about Amazon S3. I did know that it supported REST and therefore felt pretty confident that BizTalk 2013 could integrate with it using the WebHttp adapter.

The first thing I needed to do was create a developer account on the Amazon platform. Once I had created my account, I downloaded the Amazon S3 SDK for .Net. Since I will be using REST, this SDK is technically not required; however, there is a beneficial tool called the AWS Toolkit for Microsoft Visual Studio. Within this toolkit we can manage our various AWS services, including our S3 instance. We can create, read, update and delete documents using this tool. We can also use it in our testing to verify that a message has reached S3 successfully.

Another benefit of downloading the SDK is that we can use the managed libraries to manipulate S3 objects and better understand some of the terminology and functionality that is available. A side benefit is that we can fire up Fiddler while we are using the SDK and see how Amazon forms its REST calls, under the hood, when communicating with S3.

Amazon S3 Accounts

When you sign up for an S3 account you will receive an Access Key ID and a Secret Access Key. You will need both of these pieces of data in order to access your S3 services. You can think of these credentials much like the ones you use when accessing Windows Azure Services.

BizTalk Solution

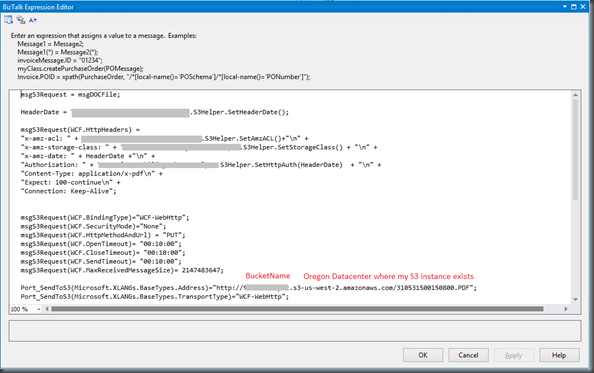

To keep this solution as simple as possible for this Blog Post, I have stripped some of the original components of the solution so that we can strictly focus on what is involved in getting the WebHttp Adapter to communicate with Amazon S3.

For the purpose of this blog post the following events will take place:

- We will receive a message that will be of type: System.Xml.XmlDocument. Don’t let this mislead you, we can receive pretty much any type of message using this message type including text documents, images and pdf documents.

- We will then construct a new instance of the message that we just received in order to manipulate some Adapter Context properties. You may now be asking – Why do I want to manipulate Adapter Context properties? The reason for this is that since we want to change some of our HTTP Header properties at runtime we therefore need to use a Dynamic Send Port as identified by Ricardo Marques.

The most challenging part of this Message Assignment Shape was populating the WCF.HttpHeaders context property. In C# if you want to populate headers you have a Header collection that you can populate in a very clean manner:

headers.Add("x-amz-date", httpDate);

However, when populating this property in BizTalk it isn’t as clean. You need to construct a string and append all of the related properties together. You also need to put each header on its own line by appending “\n”.

Tip: Don’t try to build this string in a Helper method. \n characters will be encoded and the equivalent values will not be accepted by Amazon so that is why I have built out this string inside an Expression Shape.

After I send a message (one that I have tracked in BizTalk), I should see an HTTP Header property that looks like the following:

<Property Name="HttpHeaders" Namespace="http://schemas.microsoft.com/BizTalk/2006/01/Adapters/WCF-properties" Value=

"x-amz-acl: bucket-owner-full-control

x-amz-storage-class: STANDARD

x-amz-date: Tue, 10 Dec 2013 23:25:43 GMT

Authorization: AWS <AmazonKeyID>:<EncryptedSignature>

Content-Type: application/x-pdf

Expect: 100-continue

Connection: Keep-Alive"/>

For the meaning of each of these headers I will refer you to the Amazon documentation. However, the one header that does warrant some additional discussion here is the Authorization header. This is how we authenticate with the S3 service. Constructing this string requires some additional understanding. To simplify the population of this value I have created the following helper method, which was adapted from a post on StackOverflow:

// Requires: using System; using System.Security.Cryptography; using System.Text;

public static string SetHttpAuth(string httpDate)

{

string AWSAccessKeyId = "<your_keyId>";

string AWSSecretKey = "<your_SecretKey>";

string AuthHeader = "";

string canonicalString = "PUT\n\napplication/x-pdf\n\nx-amz-acl:bucket-owner-full-control\nx-amz-date:" + httpDate + "\nx-amz-storage-class:STANDARD\n/<your_bucket>/310531500150800.PDF";

// now encode the canonical string

Encoding ae = new UTF8Encoding();

// create a hashing object

HMACSHA1 signature = new HMACSHA1();

// the Secret Access Key is the hash key

signature.Key = ae.GetBytes(AWSSecretKey);

byte[] bytes = ae.GetBytes(canonicalString);

byte[] moreBytes = signature.ComputeHash(bytes);

// convert the hash byte array into a base64 encoding

string encodedCanonical = Convert.ToBase64String(moreBytes);

// finally, this is the Authorization header.

AuthHeader = "AWS " + AWSAccessKeyId + ":" + encodedCanonical;

return AuthHeader;

}

The most important part of this method is the following line of code:

string canonicalString = "PUT\n\napplication/x-pdf\n\nx-amz-acl:bucket-owner-full-control\nx-amz-date:" + httpDate + "\nx-amz-storage-class:STANDARD\n/<your_bucket>/310531500150800.PDF";

The best way to describe what is occurring is to borrow the following from the Amazon documentation.

The Signature element is the RFC 2104 HMAC-SHA1 of selected elements from the request, and so the Signature part of the Authorization header will vary from request to request. If the request signature calculated by the system matches the Signature included with the request, the requester will have demonstrated possession of the AWS secret access key. The request will then be processed under the identity, and with the authority, of the developer to whom the key was issued.

Essentially, we are going to build up a string that reflects the various aspects of our REST call (headers, date, resource) and then create a hash of it using our Amazon secret. Since Amazon also knows our secret, it can compute the same hash over the request it actually received and check whether the result matches the signature we sent. If it does, we are golden. If not, we can expect an error like the following:

A message sent to adapter "WCF-WebHttp" on send port "SendToS3" with URI http://<bucketname>.s3-us-west-2.amazonaws.com/ is suspended.

Error details: System.Net.WebException: The HTTP request was forbidden with client authentication scheme 'Anonymous'.

<?xml version="1.0" encoding="UTF-8"?>

<Error><Code>SignatureDoesNotMatch</Code><Message>The request signature we calculated does not match the signature you provided. Check your key and signing method.</Message><StringToSignBytes>50 55 54 0a 0a 61 70 70 6c 69 63 61 74 69 6f 6e 2f 78 2d 70 64 66 0a 0a 78 2d 61 6d 7a 2d 61 63 6c 3a 62 75 63 6b 65 74 2d 6f 77 6e 65 72 2d 66 75 6c 6c 2d 63 6f 6e 74 72 20 44 65 63 20 32 30 31 33 20 30 34 3a 35 37 3a 34 35 20 47 4d 54 0a 78 2d 61 6d 7a 2d 73 74 6f 72 61 67 65 2d 63 6c 61 73 73 3a 53 54 41 4e 44 41 52 44 0a 2f 74 72 61 6e 73 61 6c 74 61 70 6f 63 2f 33 31 30 35 33 31 35 30 30 31 35 30 38 30 30 2e 50 44 46</StringToSignBytes><RequestId>6A67D9A7EB007713</RequestId><HostId>BHkl1SCtSdgDUo/aCzmBpPmhSnrpghjA/L78WvpHbBX2f3xDW</HostId><SignatureProvided>SpCC3NpUkL0Z0hE9EI=</SignatureProvided><StringToSign>PUTapplication/x-pdf

x-amz-acl:bucket-owner-full-control

x-amz-date:Thu, 05 Dec 2013 04:57:45 GMT

x-amz-storage-class:STANDARD

/<bucketname>/310531500150800.PDF</StringToSign><AWSAccessKeyId><your_key></AWSAccessKeyId></Error>

Tip: Pay attention to these error messages, as they give you a real hint as to what you need to include in your “canonicalString”. I discounted these error messages early on and didn’t take the time to really understand what Amazon was looking for.
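To make the layout of the canonical string easier to see, here is a minimal sketch that assembles it from its parts instead of hard coding it. The class and method names (S3SigningSketch, BuildStringToSign, BuildAuthHeader) are hypothetical and are not part of my solution. The sketch assumes the same signing scheme as the helper method above: the Date line is left empty because we send x-amz-date instead, and the x-amz-* headers are lower-cased and sorted by name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper for illustration only; not part of the original solution.
public static class S3SigningSketch
{
    // Assembles the string to sign in the same order as the hard-coded canonicalString:
    // verb, Content-MD5, Content-Type, Date, x-amz-* headers, resource.
    public static string BuildStringToSign(string verb, string contentMd5, string contentType,
        IDictionary<string, string> amzHeaders, string bucket, string key)
    {
        var sb = new StringBuilder();
        sb.Append(verb).Append('\n');
        sb.Append(contentMd5).Append('\n');   // empty when no Content-MD5 header is sent
        sb.Append(contentType).Append('\n');
        sb.Append('\n');                      // Date line stays empty because x-amz-date is used

        // x-amz-* headers: lower-case names, sorted by name, one "name:value" per line.
        foreach (var h in amzHeaders.OrderBy(h => h.Key.ToLowerInvariant(), StringComparer.Ordinal))
        {
            sb.Append(h.Key.ToLowerInvariant()).Append(':').Append(h.Value.Trim()).Append('\n');
        }

        // The resource always includes the bucket name, unlike the request URI.
        sb.Append('/').Append(bucket).Append('/').Append(key);
        return sb.ToString();
    }

    // Signs the string with HMAC-SHA1 and formats the Authorization header value.
    public static string BuildAuthHeader(string accessKeyId, string secretKey, string stringToSign)
    {
        using (var hmac = new HMACSHA1(Encoding.UTF8.GetBytes(secretKey)))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return "AWS " + accessKeyId + ":" + Convert.ToBase64String(hash);
        }
    }
}
```

Comparing the output of BuildStringToSign with the StringToSign element in a SignatureDoesNotMatch error is a quick way to spot a missing header or a wrong resource path.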

For completeness I will include the other three helper methods that are being used in the Expression Shape. For my actual solution I have included these values in a configuration store, but for the simplicity of this blog post I have hard coded them.

public static string SetAmzACL()

{

return "bucket-owner-full-control";

}

public static string SetStorageClass()

{

return "STANDARD";

}

public static string SetHeaderDate()

{

//Use GMT time and ensure that it is within 15 minutes of the time on Amazon’s Servers

//InvariantCulture keeps the day and month names in English regardless of the server's locale

return DateTime.UtcNow.ToString("ddd, dd MMM yyyy HH:mm:ss ", System.Globalization.CultureInfo.InvariantCulture) + "GMT";

}
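As an aside, the date format used above is the same one that .NET produces with its standard "r" (RFC1123) format specifier, so the header date can also be sketched as follows. The AmzDateSketch class and its method names are hypothetical. The skew check is only my illustration of the 15 minute rule from the comment above; it is not something Amazon provides.

```csharp
using System;
using System.Globalization;

// Hypothetical helper for illustration only; not part of the original solution.
public static class AmzDateSketch
{
    // Formats a UTC timestamp as an RFC1123 date, e.g. "Tue, 10 Dec 2013 23:25:43 GMT".
    // The "r" specifier does not convert time zones, so the value passed in must already be UTC.
    public static string Format(DateTime utc)
    {
        return utc.ToString("r", CultureInfo.InvariantCulture);
    }

    // Returns true when the header date is within the allowed skew of a reference UTC time.
    public static bool IsWithinSkew(string headerDate, DateTime referenceUtc, TimeSpan allowed)
    {
        DateTime sent = DateTime.ParseExact(headerDate, "r", CultureInfo.InvariantCulture,
            DateTimeStyles.AssumeUniversal | DateTimeStyles.AdjustToUniversal);
        return (referenceUtc - sent).Duration() <= allowed;
    }
}
```

Calling AmzDateSketch.Format(DateTime.UtcNow) gives a value in the same shape as SetHeaderDate(), without the manual "GMT" suffix.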

The next part of the Message Assignment shape is setting the standard context properties for WebHttp Adapter. Remember since we are using a Dynamic Send Port we will not be able to manipulate these values through the BizTalk Admin Console.

msgS3Request(WCF.BindingType)="WCF-WebHttp";

msgS3Request(WCF.SecurityMode)="None";

msgS3Request(WCF.HttpMethodAndUrl) = "PUT"; //Writing to Amazon S3 requires a PUT

msgS3Request(WCF.OpenTimeout)= "00:10:00";

msgS3Request(WCF.CloseTimeout)= "00:10:00";

msgS3Request(WCF.SendTimeout)= "00:10:00";

msgS3Request(WCF.MaxReceivedMessageSize)= 2147483647;

Lastly, we need to set the URI that we want to send our message to and also specify that we want to use the WCF-WebHttp adapter.

Port_SendToS3(Microsoft.XLANGs.BaseTypes.Address)="http://<bucketname>.s3-us-west-2.amazonaws.com/310531500150800.PDF";

Port_SendToS3(Microsoft.XLANGs.BaseTypes.TransportType)="WCF-WebHttp";

Note: the last part of my URI, 310531500150800.PDF, represents my Resource. In this case I have hardcoded a file name. This is obviously something that you want to make dynamic, perhaps using the FILE.ReceivedFileName context property.
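If you do make the file name dynamic, keep in mind that the same name has to appear in two places: in the send port URI and in the resource at the end of the canonical string. A rough sketch of deriving both from one file name is below. The S3AddressSketch class, its methods and the region parameter are hypothetical, and the sketch assumes simple file names without folder separators in the S3 key.

```csharp
using System;
using System.IO;

// Hypothetical helper for illustration only; not part of the original solution.
public static class S3AddressSketch
{
    // Builds the send port URI: the bucket is part of the host name.
    public static string BuildUri(string bucket, string region, string fileNameOrPath)
    {
        string name = Uri.EscapeDataString(Path.GetFileName(fileNameOrPath));
        return "http://" + bucket + ".s3-" + region + ".amazonaws.com/" + name;
    }

    // Builds the resource for the canonical string: the bucket is part of the path.
    public static string BuildCanonicalResource(string bucket, string fileNameOrPath)
    {
        string name = Uri.EscapeDataString(Path.GetFileName(fileNameOrPath));
        return "/" + bucket + "/" + name;
    }
}
```

Path.GetFileName is there because a received file name may arrive with its folder path attached; whether that applies depends on what you pass in.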

- Once we have assembled our S3 message we will go ahead and send it through our Dynamic Solicit-Response Port. The message that we are going to send to Amazon and receive back is once again of type System.Xml.XmlDocument.

- One thing to note is that the response you receive back from Amazon won’t actually have a message body (this is in line with REST). However, even though we receive an empty message body, we will still find some valuable Context Properties. The two properties of interest are:

InboundHttpStatusCode

InboundHttpStatusDescription

- The last step in the process is to write our Amazon response to disk. As we learned in the previous point, the message body will be empty, but the file still gives me an indicator that the process is working (in a Proof of Concept environment).

Overall the Orchestration is very simple. The complexity really exists in the Message Assignment shape.

Testing

Not that watching files move is super exciting, but I have created a quick Vine video that will demonstrate the message being consumed by the FILE Adapter and then sent off to Amazon S3.

Conclusion

This was a pretty fun and frustrating solution to put together. The area that caused me the most grief was easily the Authorization Header. There is some documentation out there related to Amazon “PUT”s but each call is different depending upon what type of data you are sending and the related headers. For each header that you add, you really need to include the related value in your “canonicalString”. You also need to include the complete path to your resource (/bucketname/resource) in this string even though the convention is a little different in the URI.

Also it is worth mentioning that /n Software has created a third party S3 Adapter that abstracts some of the complexity in this solution. While I have not used this particular /n Software Adapter, I have used others and have been happy with the experience. Michael Stephenson has blogged about his experiences with this adapter here.
